Designing Trust in Salesforce Data

When data quality isn’t designed into the architecture, Salesforce data rarely breaks dramatically—it erodes quietly.

The Silent Failure

Data quality rarely breaks in a dramatic way. It fails silently—one blank field at a time, one “close enough” picklist value at a time, one integration inserting instead of upserting.

Then one day, a leader asks a question that should be simple:

“What’s our pipeline looking like next quarter?”

The dashboard loads. The room goes quiet.

Someone says, “This feels high.” Someone else says, “This feels low.”

In that moment, the conversation shifts—from strategy to suspicion.

This is why I treat data quality as architecture, not hygiene. In Salesforce, degraded data doesn’t just create messy records—it creates unreliable decisions. And unreliable decisions compound into organizational paralysis.

Why Data Quality Is a Business Problem (Not a CRM Chore)

Salesforce serves as the system of record for revenue, customers, and growth in most organizations. When data inside it degrades, the effects don’t stay confined to admins or report folders. They surface as:

- Forecasts that feel “directional,” not defensible — sales leadership hedges every projection

- Marketing spend aimed at the wrong segments — campaigns miss their mark despite good creative

- Sales capacity planning driven by intuition — resourcing decisions based on gut feel, not evidence

- Leaders quietly exporting data “just to double-check” — trust erodes one spreadsheet at a time

What makes data quality especially insidious is its silence. Salesforce keeps running. Automations still fire. Reports still load. But trust erodes slowly until teams hedge every decision, and strategic conversations devolve into debates about data provenance.

This is why data quality deserves architectural intent—not just cleanup campaigns. It protects three assets that matter profoundly to the business:

- Decision quality — accurate reporting and credible forecasting

- Operational efficiency — less rework, fewer exceptions, cleaner handoffs

- Customer experience — consistent identity and coherent communication

What “Data Quality” Actually Means in Salesforce

In practice, high-quality Salesforce data satisfies six conditions:

Accuracy — reflects reality as it exists

Completeness — contains what’s needed when decisions are made

Consistency — represents the same concepts the same way across records

Validity — conforms to expected formats and business rules

Uniqueness — avoids duplicate representations of the same entity

Timeliness — stays reasonably current relative to operational needs

Teams often attempt to enforce all six with validation rules alone. That rarely works at scale. Effective data quality is layered—designed into the platform from data model to governance framework.

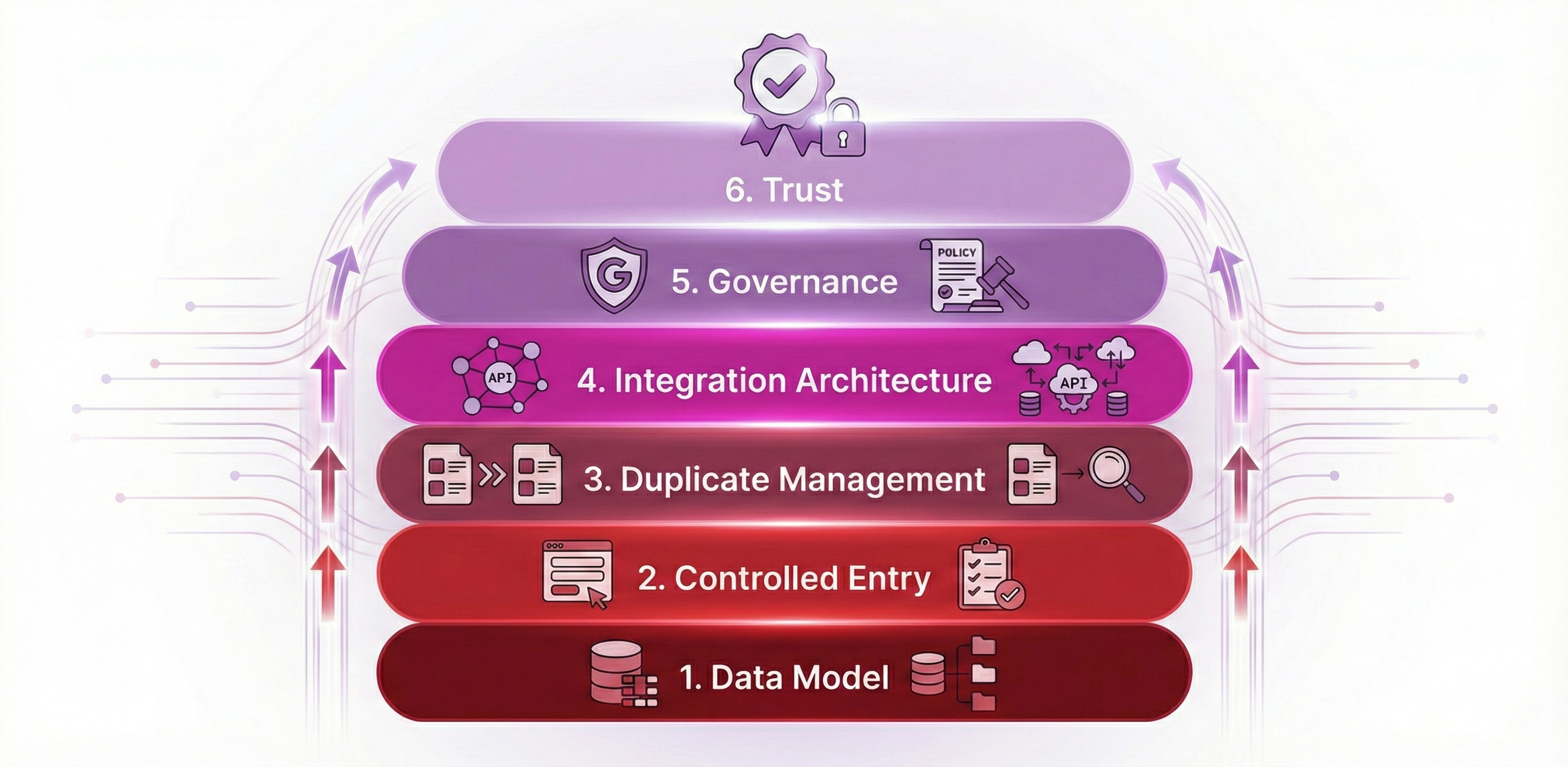

The Layered Architecture of Salesforce Data Quality

Think of data quality as defense in depth. Each layer catches what the previous one cannot. No single control is sufficient; the system succeeds through coordinated resilience.

Figure 1: The six layers of Salesforce data quality architecture

Figure 1: The six layers of Salesforce data quality architecture

Layer 1: The Data Model (Where Quality Begins—or Collapses)

Before a single record exists, your data model has already made quality decisions that will echo for years.

Architectural questions that matter more than most teams admit:

- What does an Account represent—company, location, division, household?

- How and when does identity persist from Lead to Contact to Opportunity?

- Are Person Accounts a strategic fit, or a tactical shortcut that fragments reporting?

- Are custom objects modeling real business processes—or compensating for unclear ownership?

When these answers remain fuzzy, Salesforce still “works,” but quality erodes invisibly: duplicated identities, fragile rollup summaries, and reports that require interpretive caveats.

Layer 2: Controlled Data Entry (Where Most Quality Is Won—or Lost)

Most Salesforce data enters through the user interface. Field design and entry controls are therefore decisive in shaping long-term quality outcomes.

Required Fields—Used Intentionally

Required fields are powerful, but blunt. When everything is required upfront, users either abandon records or enter placeholder values to satisfy validation. Neither outcome serves data quality.

A better approach is progressive enforcement:

- Early stages → capture what’s needed to proceed to the next step

- Middle stages → gather what’s needed for handoffs and routing

- Later stages → enforce what’s needed to forecast, approve, or execute

Picklists Over Free Text

Any field that feeds reports, automation, or integrations should be structured. Free text feels flexible during data entry; at scale, it fragments semantic meaning and creates analytical chaos.

Picklists, global value sets, and field dependencies enforce consistency—ensuring that when the business says “Enterprise,” it means the same thing in every context, every time.

Validation Rules as Encoded Policy

Validation rules are business policies written in logic. The best ones:

- Trigger at the right moment in the workflow

- Explain why the rule exists in plain language

- Prevent expensive downstream errors before they propagate

Error messages should read like product microcopy, not system logs. When users understand the “why,” compliance rises without enforcement overhead.

Layer 3: Duplicate Management (Identity Is Everything)

If data quality has a backbone, it’s identity. Duplicates fracture reality—splitting revenue attribution, fragmenting customer history, and undermining every downstream analysis.

Salesforce provides two key mechanisms:

- Matching Rules — how potential duplicates are identified

- Duplicate Rules — what happens when they’re found

A scalable strategy I’ve seen work:

- Start with alerts, not blocks — observe patterns before enforcing restrictions

- Analyze where duplicates originate — web forms, imports, integrations

- Tune matching logic — balance exact vs. fuzzy matching, adjust field weighting

- Block selectively where the business cost is highest — protect revenue objects more strictly

Duplicates rarely come from user negligence alone. They emerge from web-to-lead forms, data imports, and integrations that lack stable external keys.

Layer 4: Integration Architecture (Where Quality Scales—or Explodes)

Long-term data quality failures are often integration failures in disguise. When Salesforce connects to other systems, architectural decisions determine whether quality compounds or collapses.

Two decisions matter most:

Source of Truth Per Attribute

Every critical field needs an owner: where it originates, where it’s allowed to change, and where it’s consumed as read-only. Without this clarity, conflicts multiply and trust evaporates.

Identity Strategy Across Systems

If integrations insert records without stable external IDs, Salesforce will create duplicates indefinitely. No amount of manual cleanup will solve a structural problem.

External IDs, upserts, and canonical identity mapping are non-negotiable at scale.

Layer 5: Governance That Enables—Not Slows—Delivery

Governance succeeds when it’s lightweight, clear, and actually followed. At minimum, it should answer:

- Who owns which data domains?

- What standards apply across the organization?

- How are exceptions handled and escalated?

- How is quality measured and reported?

A simple operating model that works:

- Data Owner — defines business meaning and usage policies

- Data Steward — monitors quality and coordinates remediation

- Platform Owner — implements technical enforcement

Publish a concise “Data Rules of the Road” covering required fields by stage, duplicate handling protocols, and change request procedures. Keep it short enough that people actually read it.

A Salesforce-Aligned Implementation Playbook

If I were implementing this architecture in a real organization, I’d sequence it in three phases:

Phase 1 — Diagnose (2–3 weeks)

- Profile completeness on critical objects (Accounts, Contacts, Opportunities)

- Measure duplicate rates and identify concentration patterns

- Map all entry points (UI, imports, APIs, integrations)

Phase 2 — Stabilize (4–6 weeks)

- Introduce picklists and dependencies where free text dominates

- Implement progressive validations tied to business stages

- Enable duplicate rules in alert-first mode

- Fix integration upserts with External IDs and proper key matching

Phase 3 — Sustain (ongoing)

- Assign clear ownership for each data domain

- Monitor with a data quality dashboard tied to business KPIs

- Review monthly with business stakeholders, not just admins

This approach avoids turning data quality into a perpetual cleanup program. It builds quality into the system’s operating rhythm.

The Business Impact: What These Layers Actually Protect

When these five architectural layers work together, they deliver tangible business outcomes that extend far beyond clean data:

From Layer 1: Clean Data Models

A well-designed data model reduces downstream cost through simpler reporting, fewer data transformations, and elimination of debates about “what the number really means.” Teams spend less time reconciling conflicting definitions and more time acting on insights.

From Layer 2: Controlled Entry

Progressive enforcement keeps user adoption high while protecting decision-critical data at the moment it matters. Sales teams don’t abandon records due to excessive requirements, yet forecasts remain reliable because key fields are complete when they need to be.

From Layer 2: Structured Fields

Consistent segmentation through picklists enables trustworthy analytics without constant normalization and cleansing. Marketing can confidently target enterprise accounts knowing the definition is consistent across all records and systems.

From Layer 2: Smart Validation

Less rework, safer automation, and more credible reporting emerge when validation rules prevent errors before they propagate. Support teams spend less time fixing data issues and more time serving customers.

From Layer 3: Identity Management

Clean identity protects revenue attribution, enables coherent customer experiences, and preserves executive trust in metrics. When every customer has exactly one record, win rates and pipeline forecasts become defensible rather than directional.

From Layer 4: Integration Design

Clean integrations prevent exponential cleanup costs and preserve consistency across the entire customer lifecycle. Data flows seamlessly between marketing automation, CRM, and support systems without creating duplicates or conflicts.

From Layer 5: Lightweight Governance

Fewer workarounds, faster change velocity, and sustained trust emerge as the organization scales. Teams don’t route around the system because governance enables rather than blocks their work.

The Real Choice: Prevention or Taxation

Salesforce provides the tools. What it doesn’t decide is when you pay for data quality.

You can invest upfront—in architecture, guardrails, and clarity—or you can pay later through cleanup initiatives, reporting rework, and lost momentum. The bill always comes due.

Cleanup costs don’t show up as system failures. They show up as organizational hesitation—leaders double-checking numbers, teams hedging decisions, and opportunities missed while data is debated instead of acted upon.

Closing Thought

Clean data is not the output of a successful Salesforce project. It is the input to every project that follows.

When data quality is designed into the architecture from the beginning, Salesforce becomes what it was meant to be:

- A source of truth that leaders reference confidently

- A platform for decisive action, not cautious qualification

- A system the business actually trusts

And when that trust exists, strategy conversations stop sounding like debates about data provenance—and start sounding like decisions about market opportunity.